
Normalization of Stock Data for Stock Market Prediction Using Reinforcement Learning

Introduction

Let’s first discuss what normalization is and why it is required. Normalization is a preprocessing technique used to scale numerical data to a standard range, typically between 0 and 1, or to a distribution with a mean of 0 and a standard deviation of 1. It aims to bring features onto a similar scale, which can improve the performance and convergence of machine learning algorithms, including reinforcement learning. For a deeper understanding of feature scaling, see the Wikipedia article on the topic.

Why We Need Normalization of Stock Data

In stock market prediction using reinforcement learning, normalization is crucial for several reasons:

- Equalizing Feature Scales: Stock data often consists of various features with different scales, such as stock prices, volumes, and indicators. Normalization ensures that these features are on a similar scale, preventing one feature from dominating the learning process due to its larger scale.

- Improved Convergence: Reinforcement learning algorithms typically converge faster when features are normalized. Scaling the data makes gradient-based optimization better conditioned, which leads to quicker convergence and more stable learning dynamics.

- Enhanced Generalization: Normalization can aid in the generalization of learned policies across different stocks or market conditions. Because normalized features sit on a common scale instead of being tied to one stock’s absolute price level, the model can learn patterns that transfer better, which can improve performance on unseen data.
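To make the convergence argument concrete, the sketch below uses a linear least-squares model as a simple stand-in for the function approximator inside an RL agent (an assumption; the article does not specify a model). It compares the condition number of XᵀX for raw versus min-max scaled price and volume features on synthetic, hypothetical data. A large condition number means an elongated loss surface, so gradient descent needs small steps and many iterations.

```python
import numpy as np

# Synthetic, hypothetical data using the ranges from this article:
# prices between 50 and 200, volumes between 100,000 and 1,000,000.
rng = np.random.default_rng(seed=0)
n = 500
price = rng.uniform(50, 200, size=n)
volume = rng.uniform(100_000, 1_000_000, size=n)

def design_matrix(*columns):
    """Stack feature columns and prepend a bias column of ones."""
    return np.column_stack([np.ones(len(columns[0])), *columns])

def min_max(x):
    """Scale a 1-D array to the range [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())

X_raw = design_matrix(price, volume)
X_scaled = design_matrix(min_max(price), min_max(volume))

# Condition number of X^T X for a least-squares loss: larger values mean a more
# elongated loss surface, forcing smaller gradient steps and more iterations.
cond_raw = np.linalg.cond(X_raw.T @ X_raw)
cond_scaled = np.linalg.cond(X_scaled.T @ X_scaled)
print(f"raw:    {cond_raw:.3e}")
print(f"scaled: {cond_scaled:.3e}")
```

On this synthetic data the raw condition number is many orders of magnitude larger than the scaled one; the exact figures depend on the random sample.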

Example:

Consider a simplified example with two features: stock price and trading volume. The stock price ranges from 50 to 200, while the trading volume ranges from 100,000 to 1,000,000. Without normalization, volume values are thousands of times larger than prices, which can bias learning toward the volume feature. By normalizing both features to the range [0, 1], for example with min-max scaling, we put both features on the same scale during training.

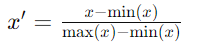

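Mathematically, the min-max normalized value x′ of a feature x can be calculated as:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where x_min and x_max are the minimum and maximum values of that feature.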
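Applied to the price and volume ranges from the example, min-max scaling, x′ = (x - min) / (max - min), can be sketched in a few lines of NumPy. The function name `min_max_scale` and the sample values are hypothetical; the only addition beyond the formula is a guard for constant features, where max equals min.

```python
import numpy as np

def min_max_scale(x: np.ndarray) -> np.ndarray:
    """Scale a 1-D array to [0, 1] with x' = (x - min) / (max - min)."""
    x = np.asarray(x, dtype=float)
    x_min, x_max = x.min(), x.max()
    if x_max == x_min:
        # A constant feature has no range; return zeros instead of dividing by zero.
        return np.zeros_like(x)
    return (x - x_min) / (x_max - x_min)

# Hypothetical sample values inside the ranges from the example above.
price = np.array([50.0, 80.0, 125.0, 200.0])
volume = np.array([100_000.0, 325_000.0, 550_000.0, 1_000_000.0])

scaled_price = min_max_scale(price)    # values: 0.0, 0.2, 0.5, 1.0
scaled_volume = min_max_scale(volume)  # values: 0.0, 0.25, 0.5, 1.0
```

After scaling, a price of 125 and a volume of 550,000 both map to 0.5, so neither feature dominates simply because of its units.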

Pros of Normalizing Stock Data:

- Improved Convergence: Normalization speeds up the convergence of reinforcement learning algorithms by bringing features onto a similar scale.

- Stabilized Training: Normalization stabilizes the training process, preventing large fluctuations in learning dynamics and improving overall stability.

- Enhanced Generalization: Normalization aids in the generalization of learned policies across different stocks or market conditions, leading to more robust predictions.

Cons of Normalizing Stock Data:

- Loss of Interpretability: Normalization can result in a loss of interpretability, as the transformed data might not directly correspond to the original stock prices or volumes.

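- Risk of Data Leakage and Overfitting: If the normalization parameters (for example the minimum, maximum, mean, or standard deviation) are computed over the full dataset, information from the test period leaks into training (look-ahead bias), which produces optimistic backtests and poorer performance on unseen data. Parameters should be learned from the training data only, but because stock prices are non-stationary, future values may still fall outside the range seen during training.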

- Additional Complexity: Normalization introduces additional complexity and computational overhead, which could be undesirable in resource-constrained environments or real-time prediction scenarios.
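Two of these drawbacks can be reduced in code. The sketch below, using a hypothetical `MinMaxScaler1D` class and made-up prices, splits the series chronologically, fits the scaling parameters on the training period only (avoiding look-ahead leakage), and keeps an inverse transform so scaled values can be mapped back to prices. scikit-learn's `MinMaxScaler` follows the same fit/transform/inverse_transform pattern for 2-D arrays.

```python
import numpy as np

class MinMaxScaler1D:
    """Hypothetical minimal min-max scaler that remembers the parameters it was fitted on."""

    def fit(self, x):
        self.x_min = float(np.min(x))
        self.x_max = float(np.max(x))
        return self

    def transform(self, x):
        # Values outside the training range map outside [0, 1]; they are not clipped.
        return (np.asarray(x, dtype=float) - self.x_min) / (self.x_max - self.x_min)

    def inverse_transform(self, x_scaled):
        # Map scaled values back to original units (e.g. prices) for interpretability.
        return np.asarray(x_scaled, dtype=float) * (self.x_max - self.x_min) + self.x_min

# Hypothetical daily closing prices in chronological order.
prices = np.array([100.0, 102.0, 101.0, 105.0, 110.0, 108.0, 115.0, 120.0])

# Split by time, not by random shuffling, so the test period is strictly later.
split = 6
train, test = prices[:split], prices[split:]

scaler = MinMaxScaler1D().fit(train)  # parameters come from training data only
train_scaled = scaler.transform(train)
test_scaled = scaler.transform(test)  # 115 and 120 exceed the training max of 110
restored = scaler.inverse_transform(test_scaled)
```

The test prices scale to values above 1.0 because they exceed the training maximum. Whether to leave such values unclipped, clip them, or recompute parameters over a rolling window is a design choice that depends on the agent and the data.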

In conclusion, while normalization plays a vital role in improving the performance and convergence of reinforcement learning models for stock market prediction, it’s essential to carefully consider its pros and cons and select appropriate normalization techniques based on the specific requirements and characteristics of the problem domain.

Below are some commonly used normalization techniques:

- Min-max scaling

- Z-score (standardization)

- Robust scaling

- Log Transformation

- Normalization by Total Volume

- Exponential Moving Average (EMA) Normalization
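The remaining techniques can be sketched with NumPy as below. The helper names and the sample data are hypothetical. The list above does not define "normalization by total volume" or "EMA normalization" precisely, so the code assumes one common reading of each: volume divided by the total volume in the window, and price divided by its own exponential moving average. Treat both as assumptions rather than the author's method.

```python
import numpy as np

def z_score(x):
    """Standardization: zero mean, unit (population) standard deviation."""
    return (x - x.mean()) / x.std()

def robust_scale(x):
    """Center on the median and divide by the interquartile range (IQR) to limit outlier influence."""
    q1, median, q3 = np.percentile(x, [25, 50, 75])
    return (x - median) / (q3 - q1)

def log_transform(x):
    """log(1 + x): compresses heavy-tailed, non-negative features such as volume."""
    return np.log1p(x)

def volume_share(volume):
    """ASSUMPTION: 'normalization by total volume' read as each period's share of the window's total."""
    return volume / volume.sum()

def ema(x, span):
    """Exponential moving average with alpha = 2 / (span + 1), seeded with the first value."""
    alpha = 2.0 / (span + 1)
    out = np.empty_like(x, dtype=float)
    out[0] = x[0]
    for t in range(1, len(x)):
        out[t] = alpha * x[t] + (1 - alpha) * out[t - 1]
    return out

def ema_normalize(price, span=10):
    """ASSUMPTION: 'EMA normalization' read as price divided by its own EMA (values near 1.0)."""
    return price / ema(price, span)

# Hypothetical five-day sample with a volume spike on day 4.
price = np.array([100.0, 102.0, 101.0, 105.0, 110.0])
volume = np.array([1_000_000.0, 1_200_000.0, 900_000.0, 5_000_000.0, 1_100_000.0])

price_z = z_score(price)
volume_robust = robust_scale(volume)  # the spike maps to 19.5; other days fall between -1.0 and 0.5
volume_log = log_transform(volume)
volume_frac = volume_share(volume)    # sums to 1.0
price_vs_ema = ema_normalize(price, span=3)
```

As with min-max scaling, statistics such as the mean, median, and IQR should be computed on training data only before being applied to later periods.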

Keep learning, Keep Exploring – DataSciInsight 🙂
